- April 10, 2024

- 12:12 PM

A threat actor is using a PowerShell script that was likely created with the help of an artificial intelligence system such as OpenAI's ChatGPT, Google's Gemini, or Microsoft's Copilot.

The adversary used the script in an email campaign in March that targeted tens of organizations in Germany to deliver the Rhadamanthys information stealer.

AI-based PowerShell deploys infostealer

Researchers at cybersecurity company Proofpoint attributed the attack to a threat actor tracked as TA547, believed to be an initial access broker (IAB).

TA547, also known as Scully Spider, has been active since at least 2017, delivering a variety of malware for Windows (ZLoader/Terdot, Gootkit, Ursnif, Corebot, Panda Banker, Atmos) and Android (Mazar Bot, Red Alert) systems.

Recently, the threat actor started using Rhadamanthys, a modular stealer whose data collection capabilities (clipboard, browser, cookies) are constantly being expanded.

Proofpoint has been tracking TA547 since 2017 and said that this campaign was the first one where the threat actor was observed using Rhadamanthys malware.

The info stealer has been distributed since September 2022 to multiple cybercrime groups under the malware-as-a-service (MaaS) model.

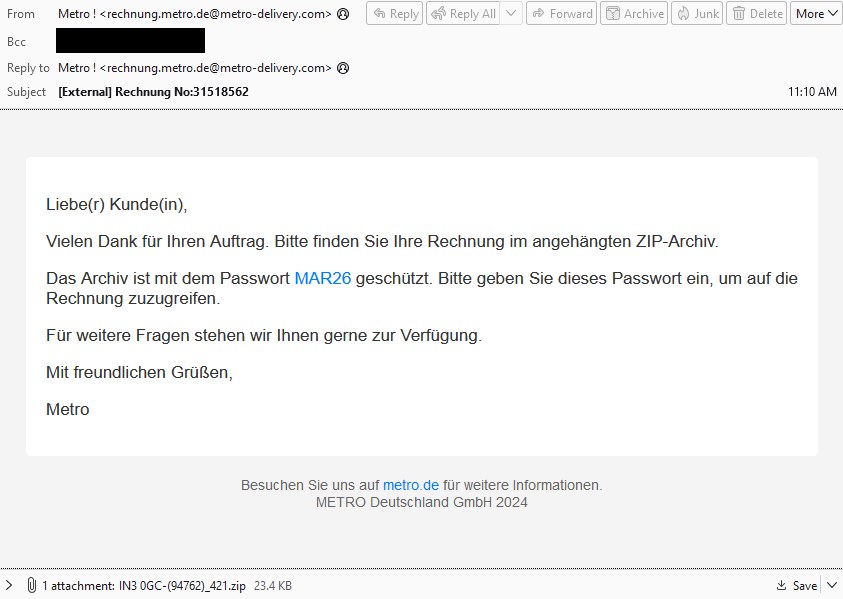

According to Proofpoint researchers, TA547 impersonated the Metro cash-and-carry German brand in a recent email campaign using invoices as a lure for “dozens of organizations across various industries in Germany.”

source: Proofpoint

The messages included a ZIP archive protected with the password 'MAR26', which contained a malicious shortcut file (.LNK). Accessing the shortcut file triggered PowerShell to run a remote script.
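Because the payload arrived as a password-protected ZIP holding a shortcut file, a mail gateway or analyst can still flag it without knowing the password. The following is a minimal triage sketch, not Proofpoint's detection logic: the function name `triage_zip` and the extension list are hypothetical. It relies on the fact that traditional ZIP encryption hides file contents but not entry names. For extraction inside a sandbox, Python's `ZipFile.read(name, pwd=b"MAR26")` can decrypt entries that use traditional (ZipCrypto) encryption, though not AES-encrypted ones.

```python
import io
import zipfile

# Shortcut files inside an archive attachment are treated as suspicious.
# The extension list is an illustrative assumption, not Proofpoint's logic.
SUSPICIOUS_EXTENSIONS = (".lnk",)


def triage_zip(data: bytes) -> dict:
    """Report whether a ZIP is encrypted and which entries are shortcuts.

    Entry names are listed without the password: traditional ZIP encryption
    protects file contents, while names stay readable in the central directory.
    """
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        infos = archive.infolist()
        # Bit 0 of the general-purpose flag marks an encrypted entry.
        encrypted = any(info.flag_bits & 0x1 for info in infos)
        shortcuts = [
            info.filename
            for info in infos
            if info.filename.lower().endswith(SUSPICIOUS_EXTENSIONS)
        ]
    return {"encrypted": encrypted, "shortcuts": shortcuts}


# Usage with a hypothetical, unencrypted test archive built in memory
# (zipfile can read but not create password-protected archives).
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("invoice.lnk", b"placeholder")
    archive.writestr("notes.txt", b"hello")
print(triage_zip(buffer.getvalue()))
```

A real archive like the one in this campaign would report `"encrypted": True` alongside the `.lnk` entry, a combination that is rare in legitimate invoice emails.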

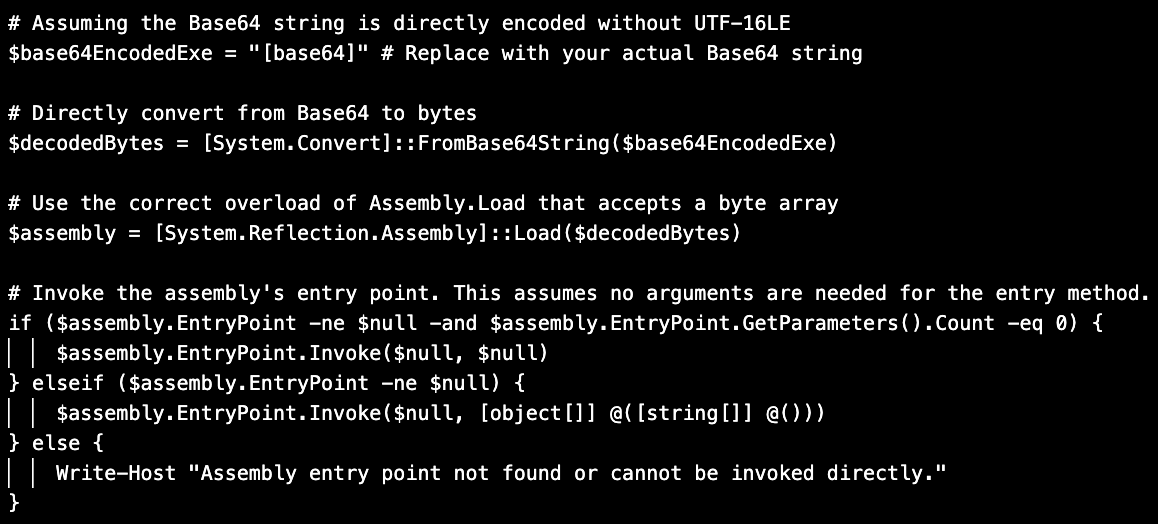

“This PowerShell script decoded the Base64-encoded Rhadamanthys executable file stored in a variable and loaded it as an assembly into memory and then executed the entry point of the assembly” - Proofpoint

The researchers explain that this method allowed the malicious code to be executed in memory without touching the disk.
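Since the executable never touches the disk, file-based scanning misses it, and defenders typically inspect the script text instead. The sketch below is a hypothetical static check, not Proofpoint's method: it assumes the loader uses the usual .NET calls for this technique (`FromBase64String`, `[Reflection.Assembly]::Load`, `EntryPoint.Invoke`), which the report describes only in prose, and it decodes long Base64 runs to see whether they start with the `MZ` magic of a Windows executable. The names `scan_script` and `count_embedded_pe` and the 200-character threshold are illustrative choices.

```python
import base64
import binascii
import re

# Typical .NET calls for loading an assembly from a Base64 string in
# PowerShell. Assumed for illustration; the exact calls in TA547's script
# are not quoted in the report, and obfuscated scripts can hide them.
# PowerShell is case-insensitive, so matching ignores case.
INDICATORS = {
    "base64_decode": re.compile(r"FromBase64String", re.IGNORECASE),
    "assembly_load": re.compile(r"Reflection\.Assembly\]::Load", re.IGNORECASE),
    "entry_point": re.compile(r"EntryPoint\.Invoke", re.IGNORECASE),
}

# Long runs of Base64 characters, such as an executable stored in a variable.
BASE64_BLOB = re.compile(r"[A-Za-z0-9+/]{200,}={0,2}")


def count_embedded_pe(script: str) -> int:
    """Count Base64 blobs that decode to data starting with the PE 'MZ' magic."""
    count = 0
    for match in BASE64_BLOB.finditer(script):
        try:
            decoded = base64.b64decode(match.group(0), validate=True)
        except binascii.Error:
            continue  # not valid Base64 (e.g. wrong padding); skip it
        if decoded[:2] == b"MZ":
            count += 1
    return count


def scan_script(script: str) -> dict:
    """Return which in-memory loading indicators appear in the script."""
    hits = {name: bool(rx.search(script)) for name, rx in INDICATORS.items()}
    hits["embedded_pe"] = count_embedded_pe(script) > 0
    return hits
```

Each indicator on its own also appears in legitimate administration scripts, so the signal comes from the combination. String matching is also easy to defeat with obfuscation, which is why logging the deobfuscated script at run time (for example, PowerShell script block logging) is usually preferred over static checks alone.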

Analyzing the PowerShell script that loaded Rhadamanthys, the researchers noticed that each component of the script had a pound/hash sign (#) followed by a specific comment above it, a pattern that is uncommon in human-written code.

source: Proofpoint

The researchers note that these characteristics are typical of code generated by AI tools like ChatGPT, Gemini, or Copilot.

While they cannot be absolutely certain that the PowerShell code came from a large language model (LLM), the researchers say that the script's content suggests TA547 may have used generative AI to write or rewrite it.

Daniel Blackford, director of Threat Research at Proofpoint, explained to BleepingComputer that while developers are great at writing code, their comments are usually cryptic, or at least unclear and marred by grammatical errors.

"The PowerShell script suspected of being LLM-generated is meticulously commented with impeccable grammar. Nearly every line of code has some associated comment," Blackford told BleepingComputer.
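Blackford's observation that nearly every line carries a comment can be expressed as a simple ratio. The sketch below is a hypothetical heuristic for illustration, not Proofpoint's methodology: `comment_density` counts a code line as commented if it has a `#` on the same line or sits directly below a full-line comment. It treats any `#` on a code line as a comment marker (even inside a string) and does not understand `<# ... #>` block comments.

```python
def comment_density(script: str) -> float:
    """Fraction of non-blank code lines that have an associated '#' comment.

    A code line counts as commented if it contains '#' or immediately
    follows a full-line comment. Blank lines break that association.
    """
    code_lines = 0
    commented = 0
    previous_was_comment = False
    for raw in script.splitlines():
        line = raw.strip()
        if not line:
            previous_was_comment = False
            continue
        if line.startswith("#"):
            previous_was_comment = True
            continue
        code_lines += 1
        if previous_was_comment or "#" in line:
            commented += 1
        previous_was_comment = False
    return commented / code_lines if code_lines else 0.0


# Usage on a short, hypothetical PowerShell fragment.
sample = "\n".join([
    "# Define the path to the log file",
    "$path = 'log.txt'",
    "# Read the contents of the file",
    "$data = Get-Content $path",
    "Write-Output $data",
])
print(comment_density(sample))
```

A ratio close to 1.0 is weak evidence on its own, since some human developers comment heavily; it matches the pattern Blackford describes but cannot by itself establish that a script was LLM-generated.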

Additionally, based on the output of experiments in which LLMs generated code, the researchers have medium to high confidence that the script TA547 used in the email campaign was generated using this type of technology.

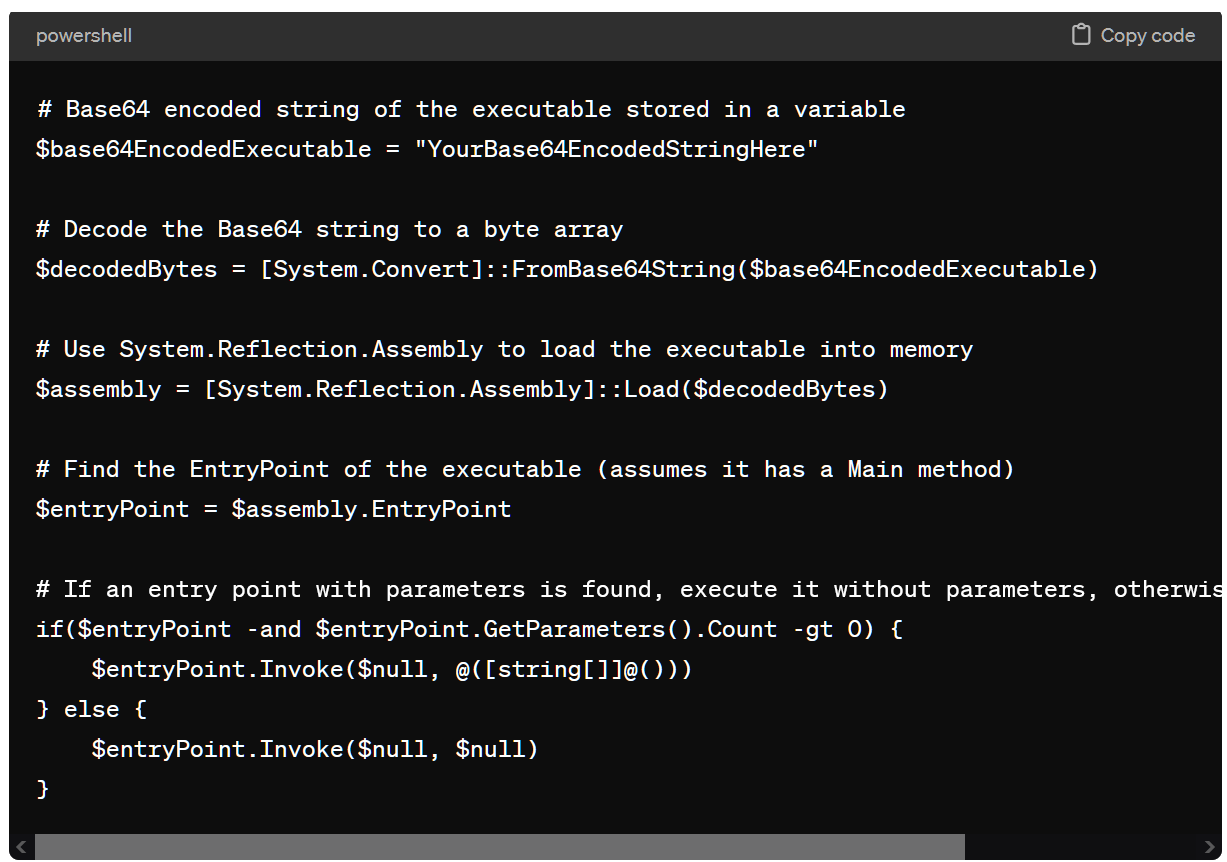

BleepingComputer used ChatGPT-4 to create a similar PowerShell script and the output code looked like the one seen by Proofpoint, including variable names and comments, further indicating it is likely that AI was used to generate the script.

source: BleepingComputer

Another possibility is that TA547 copied the script from a source that relied on generative AI for coding.

AI for malicious activities

Since OpenAI released ChatGPT in late 2022, financially motivated threat actors have been leveraging AI to create customized or localized phishing emails, run network scans to identify vulnerabilities on hosts or networks, and build highly credible phishing pages.

Some nation-state actors associated with China, Iran, and Russia have also turned to generative AI to improve productivity when researching targets, cybersecurity tools, and methods to establish persistence and evade detection, as well as for scripting support.

In mid-February, OpenAI announced that it had blocked accounts associated with the state-sponsored hacker groups Charcoal Typhoon, Salmon Typhoon (China), Crimson Sandstorm (Iran), Emerald Sleet (North Korea), and Forest Blizzard (Russia) that were abusing ChatGPT for malicious purposes.

Because most large language models try to restrict output that could be used for malware or other malicious behavior, threat actors have launched their own AI chat platforms for cybercriminals.

Update [16:40 EST]: Added clarifications from Daniel Blackford, director of Threat Research at Proofpoint, received after publishing time.